Kolja


Adventure Game Jam 2023

I took a two-week break from my current game project (“Twin Towers”) to experiment with a few ideas for my submission to Adventure Game Jam 2023 on itch.io. Just barely made the deadline 😀 My ongoing adventure game project is eating up a ton of time, even though it isn’t particularly large in scope. I am thinking a lot about ways to tell stories about Amy’s world in smaller…

-

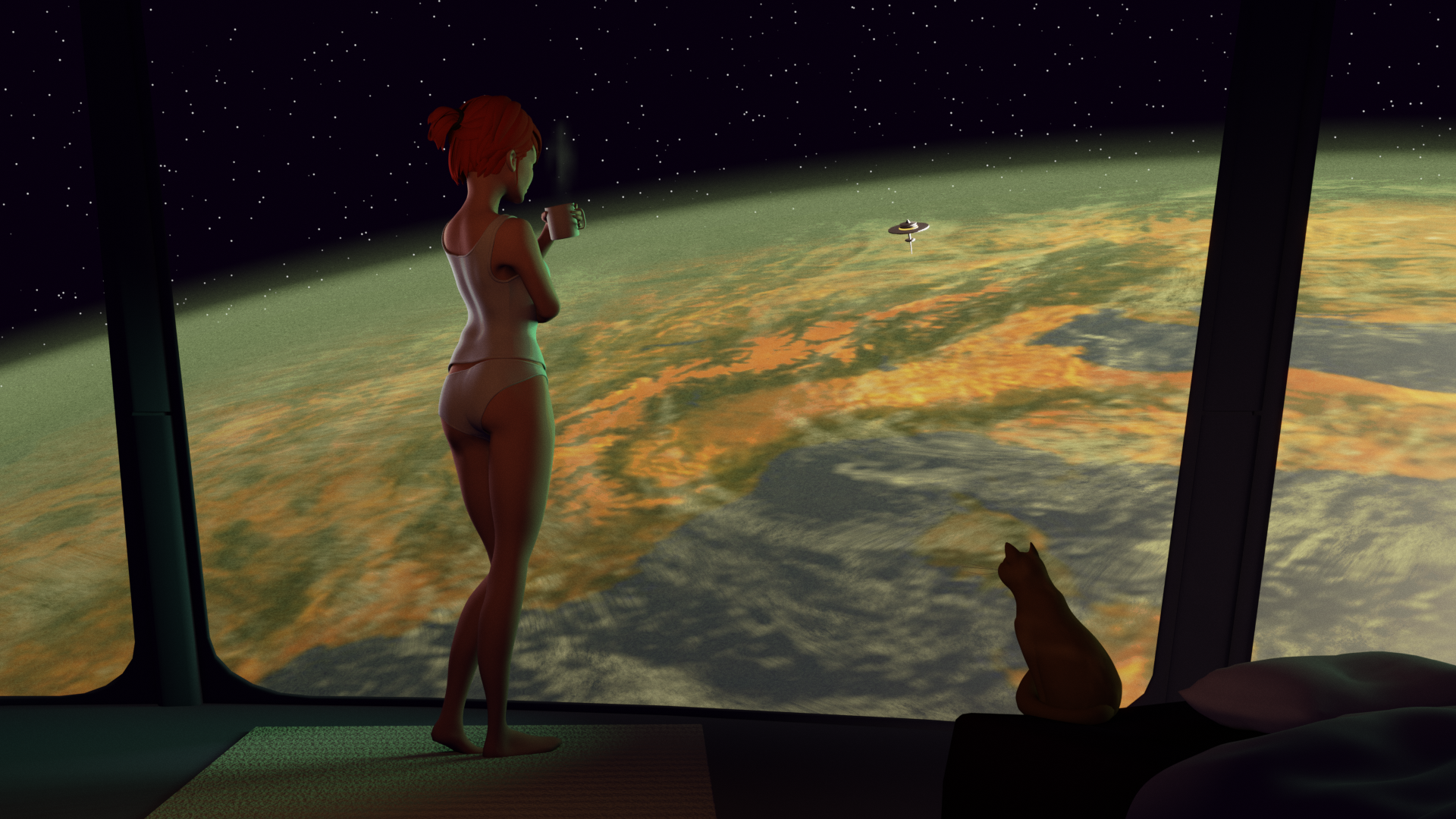

Amy Shifts Gears

- Playable Demo

- UE5 / C++ / Blueprint

- 3D

- Blender / Grease Pencil

- Low poly stylized look

- Niagara particles

- Lua-based story backend

-

Amy’s Prologue

Yay, I did it! As part of the Narrative Game Jam #5 on itch.io, there is now a playable prologue for my upcoming game. It is still very rough – a game jam submission after all – but a big milestone for me: the first public artifact introducing the game world. And there’s finally a title for the project, too. Give it a spin, it’s a click-through story that should…

-

Stickers!

Apart from gamedev activities, I am still drawing! This year’s Inktober project finally turned into something tangible: animal stickers! They are available in my brand-new shop on Redbubble. Check them out!

-

Unreal Adventures

Having done some smaller games, it is time to start something bigger and better! I am currently working on an action adventure title using Unreal Engine, involving a slightly nerdy fox lady named Amy, in a world populated by animals and robots in peaceful coexistence. There’s an epic story, of course, which I already had to divide into three parts to arrive at a manageable project size. At the time…

-

Tiny game #7: Ascent – my first game jam!

This is my submission for the GameDev.tv 2022 10-day Game Jam, my first game jam ever. It’s been intense, but totally worth it! You can download a Windows executable (and the Unity source code, if you’re interested) at the above link, or play the game in your web browser by clicking on the image below: click on the image to open the game in a new window – WebGL-enabled browser…